Search Bench

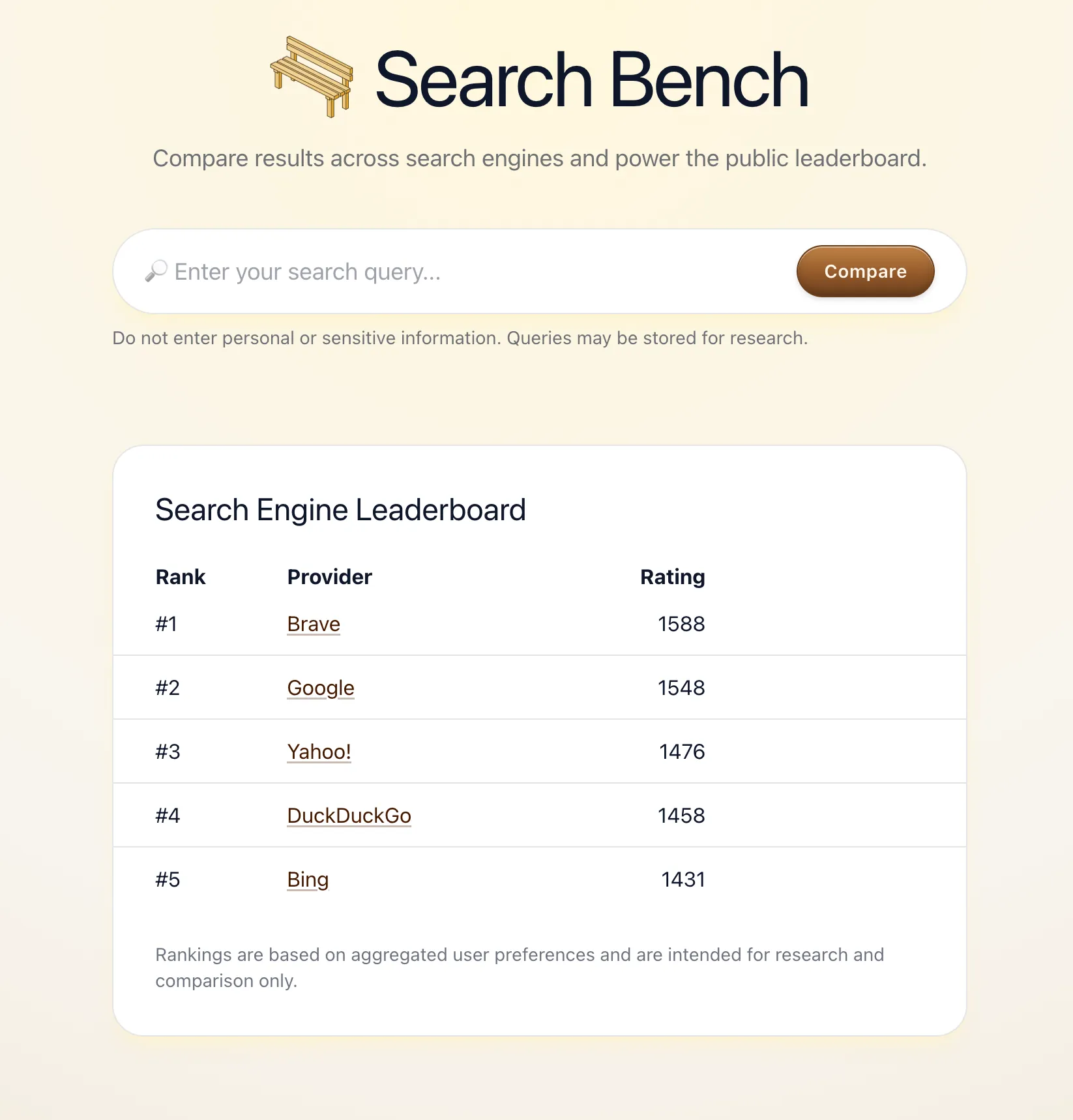

Search Bench is a community-driven experiment that compares search engines without showing which engine produced which results. Inspired by LLM Arena, it presents two anonymous result sets side by side, asks you to pick the better one (or mark them as similar), and aggregates those blind votes into a public leaderboard.

The rankings are built from pairwise comparisons using a Bradley–Terry model. Ties count as half a win for each side, ability scores are updated iteratively and normalized by their geometric mean, and the final scores are log-scaled to produce an Elo-like scale. Matchups are selected adaptively, using an uncertainty × closeness weighting that prioritizes under-sampled engines and close calls.
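To make the scoring steps concrete, here is a minimal sketch of a Bradley–Terry fit with ties as half wins, geometric-mean normalization, and a log-scaled rating. It is an illustration under stated assumptions, not the project's actual code: the `Vote` shape, the function names, the 1500 base and 400 scale, the small floor for engines with zero wins, and the specific uncertainty and closeness formulas in `pairWeight` are all hypothetical.

```typescript
// Hypothetical vote record; the real schema is not described in this README.
type Vote = { a: string; b: string; outcome: "a" | "b" | "tie" };

// Order-independent key for a pair of engines.
const pairKey = (x: string, y: string): string => (x < y ? `${x}|${y}` : `${y}|${x}`);

// Fit Bradley-Terry strengths with the standard iterative (MM) update:
//   p_i <- W_i / sum_j [ n_ij / (p_i + p_j) ]
// where W_i is the win count (a tie adds 0.5 to each side) and n_ij is the
// number of comparisons between engines i and j.
function fitBradleyTerry(votes: Vote[], iterations = 200): Map<string, number> {
  const wins = new Map<string, number>();
  const games = new Map<string, number>();
  for (const v of votes) {
    const scoreA = v.outcome === "a" ? 1 : v.outcome === "tie" ? 0.5 : 0;
    wins.set(v.a, (wins.get(v.a) ?? 0) + scoreA);
    wins.set(v.b, (wins.get(v.b) ?? 0) + (1 - scoreA));
    const k = pairKey(v.a, v.b);
    games.set(k, (games.get(k) ?? 0) + 1);
  }

  const engines = Array.from(wins.keys());
  let p = new Map<string, number>();
  for (const e of engines) p.set(e, 1); // start every engine at strength 1

  for (let it = 0; it < iterations; it++) {
    const next = new Map<string, number>();
    let logSum = 0;
    for (const i of engines) {
      let denom = 0;
      for (const j of engines) {
        if (i === j) continue;
        const n = games.get(pairKey(i, j)) ?? 0;
        if (n > 0) denom += n / (p.get(i)! + p.get(j)!);
      }
      // Assumption: a tiny floor keeps an engine with zero wins strictly positive.
      const w = Math.max(wins.get(i)!, 1e-6);
      const value = denom > 0 ? w / denom : p.get(i)!;
      next.set(i, value);
      logSum += Math.log(value);
    }
    // Normalize so the geometric mean of all strengths is 1.
    const geoMean = Math.exp(logSum / engines.length);
    for (const e of engines) next.set(e, next.get(e)! / geoMean);
    p = next;
  }
  return p;
}

// Log-scale a strength onto an Elo-like scale (base and scale are assumptions).
function toEloLike(strength: number, base = 1500, scale = 400): number {
  return base + (scale * Math.log(strength)) / Math.LN10;
}

// One possible uncertainty x closeness weight for choosing the next matchup.
// Uncertainty shrinks as engines accumulate comparisons; closeness peaks when
// the predicted win probability is 0.5. Both formulas are illustrative guesses.
function pairWeight(pI: number, pJ: number, gamesI: number, gamesJ: number): number {
  const uncertainty = 1 / Math.sqrt(1 + gamesI) + 1 / Math.sqrt(1 + gamesJ);
  const winProb = pI / (pI + pJ);
  const closeness = 1 - Math.abs(2 * winProb - 1);
  return uncertainty * closeness;
}
```

Because the strengths are normalized to a geometric mean of 1, an average engine lands exactly on the base rating after log-scaling, and only the ratios between strengths carry meaning. A scheduler could sample the next pair with probability proportional to `pairWeight`, which favors engines with few votes and pairs whose outcome is hard to predict.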

Search Bench is intentionally not an objective ranking: queries and voters are self-selected, results vary by context, and “better” is subjective. The goal is to learn whether removing brand bias reveals a real quality signal at scale, and how that signal shifts as more independent voters contribute.

Technologies used:

- Next.js
- Tailwind CSS
- SQLite